Projects

- Ongoing

TranSpace 3.0 : Open XR platform

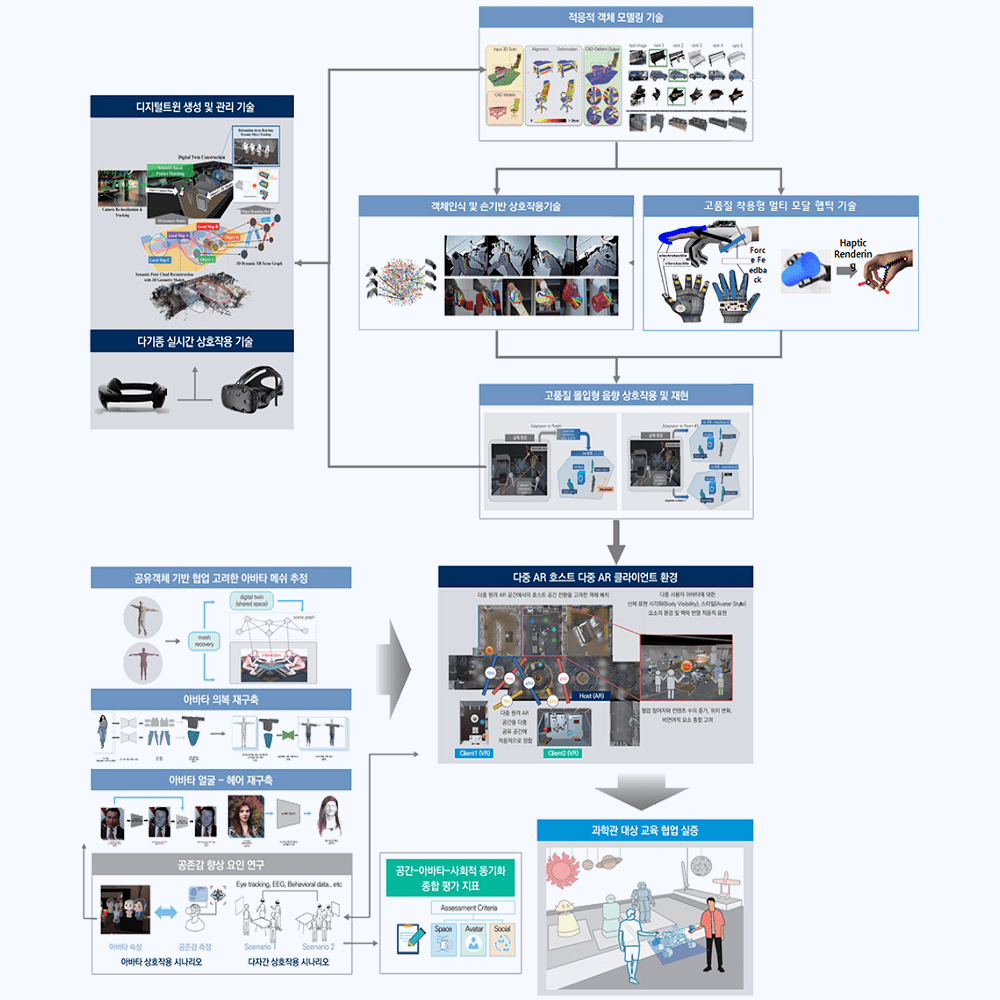

This research aims to develop an open XR collaboration platform that provides high-quality immersion and social co-presence to improve the quality of user collaboration. The platform is built on a scene graph and uses digital twins to manage collaborative interactions with spatial models, static and dynamic objects, sound, hand gestures, and haptic elements through operations such as creation, deletion, movement, and transformation. The study focuses on two goals: developing high-quality immersive elements and enhancing social co-presence.
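As an illustration of the scene-graph operations named above (creation, deletion, movement, transformation), here is a minimal Python sketch. It is not the TranSpace implementation: the `Node` and `SceneGraph` classes, the `kind` labels, and the simplification of "transformation" to a uniform scale factor are all assumptions made for this example, and world positions ignore rotation and scale.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    """Hypothetical scene-graph node; not taken from the platform itself."""
    name: str
    kind: str  # e.g. "spatial_model", "object", "sound", "gesture", "haptic"
    position: Vec3 = (0.0, 0.0, 0.0)  # offset relative to the parent node
    scale: float = 1.0
    parent: Optional[str] = None
    children: List[str] = field(default_factory=list)


class SceneGraph:
    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {"root": Node("root", "root")}

    def create(self, name: str, kind: str, parent: str = "root",
               position: Vec3 = (0.0, 0.0, 0.0)) -> Node:
        if name in self.nodes:
            raise ValueError(f"node {name!r} already exists")
        if parent not in self.nodes:
            raise KeyError(f"unknown parent {parent!r}")
        node = Node(name, kind, position, parent=parent)
        self.nodes[name] = node
        self.nodes[parent].children.append(name)
        return node

    def delete(self, name: str) -> None:
        """Remove a node together with its whole subtree."""
        if name == "root":
            raise ValueError("the root node cannot be deleted")
        node = self.nodes[name]
        for child in list(node.children):  # copy: children shrink as we recurse
            self.delete(child)
        self.nodes[node.parent].children.remove(name)
        del self.nodes[name]

    def move(self, name: str, delta: Vec3) -> None:
        """Translate a node; descendants follow because offsets are relative."""
        node = self.nodes[name]
        node.position = tuple(p + d for p, d in zip(node.position, delta))

    def transform(self, name: str, scale_factor: float) -> None:
        """Simplified 'transformation': multiply the node's uniform scale."""
        self.nodes[name].scale *= scale_factor

    def world_position(self, name: str) -> Vec3:
        """Sum the relative offsets from the node up to the root."""
        total = [0.0, 0.0, 0.0]
        current: Optional[str] = name
        while current is not None:
            node = self.nodes[current]
            total = [t + p for t, p in zip(total, node.position)]
            current = node.parent
        return tuple(total)
```

Because child offsets are stored relative to the parent, moving a room node implicitly moves every object placed in it, which is one reason a scene graph is a convenient structure for keeping shared XR scenes consistent across clients.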

The research covers the composition and integration of 3D objects in the spatial domain, optimal alignment of scanned data and CAD models, sound reproduction techniques, application of hand gestures using reinforcement learning in a physics simulator, high-quality multi-modal haptic technology supporting wearable interfaces, avatar mesh-point cloud interaction, force-based interaction, deep learning models for 3D clothing reconstruction, adaptive spatial configuration between multiple VR clients and single AR hosts, CG avatar face and costume reconstruction with texture extraction, as well as the development of evaluation metrics and factors for enhancing social co-presence in XR.

Open platform for XR collaboration, Authoring for XR contents, XR techniques for high immersion, XR smart construction, XR silver health care

The expected impact of this study lies in securing computing platform technology for XR collaboration space services, in preparation for future changes in the science and technology environment. At the national and societal level, it aims to provide immersive experiences close to face-to-face interaction in contact-free settings, reducing educational disparities and offering efficient remote collaboration environments that improve citizens' quality of life. Moreover, by leveraging open platform technologies, XR can be introduced to various industries, contributing to the rapid growth of the XR market.


This research was supported by the National Research Council of Science and Technology (NST), funded by the Ministry of Science and ICT (MSIT), Republic of Korea (Grant No. CRC 21011).

- Dooyoung Kim, Woontack Woo, "Edge-Centric Space Rescaling with Redirected Walking for Dissimilar Physical-Virtual Space Registration", 2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), doi:10.1109/ISMAR59233.2023.00098. Conf. Int.

- Hail Song, Boram Yoon, Woojin Cho, Woontack Woo, "RC-SMPL: Real-time Cumulative SMPL-based Avatar Generation", 2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), doi:10.1109/ISMAR59233.2023.00023. Conf. Int.

- Seoyoung Kang, Hail Song, Boram Yoon, Kangsoo Kim, Woontack Woo, "Effects of Different Facial Blendshape Combinations on Social Presence for Avatar-mediated Mixed Reality Remote Communication", 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), doi:10.1109/ISMAR-Adjunct60411.2023.00094. Conf. Int.

- Boram Yoon, Jae-eun Shin, Hyung-il Kim, Seo Young Oh, Dooyoung Kim, Woontack Woo, "Effects of Avatar Transparency on Social Presence in Task-Centric Mixed Reality Remote Collaboration", IEEE Transactions on Visualization and Computer Graphics, vol. 29, no. 11, November 2023, doi:10.1109/TVCG.2023.3320258. Journal Int.

Dynamic Digital Twin for Realistic Untact XR Collaboration

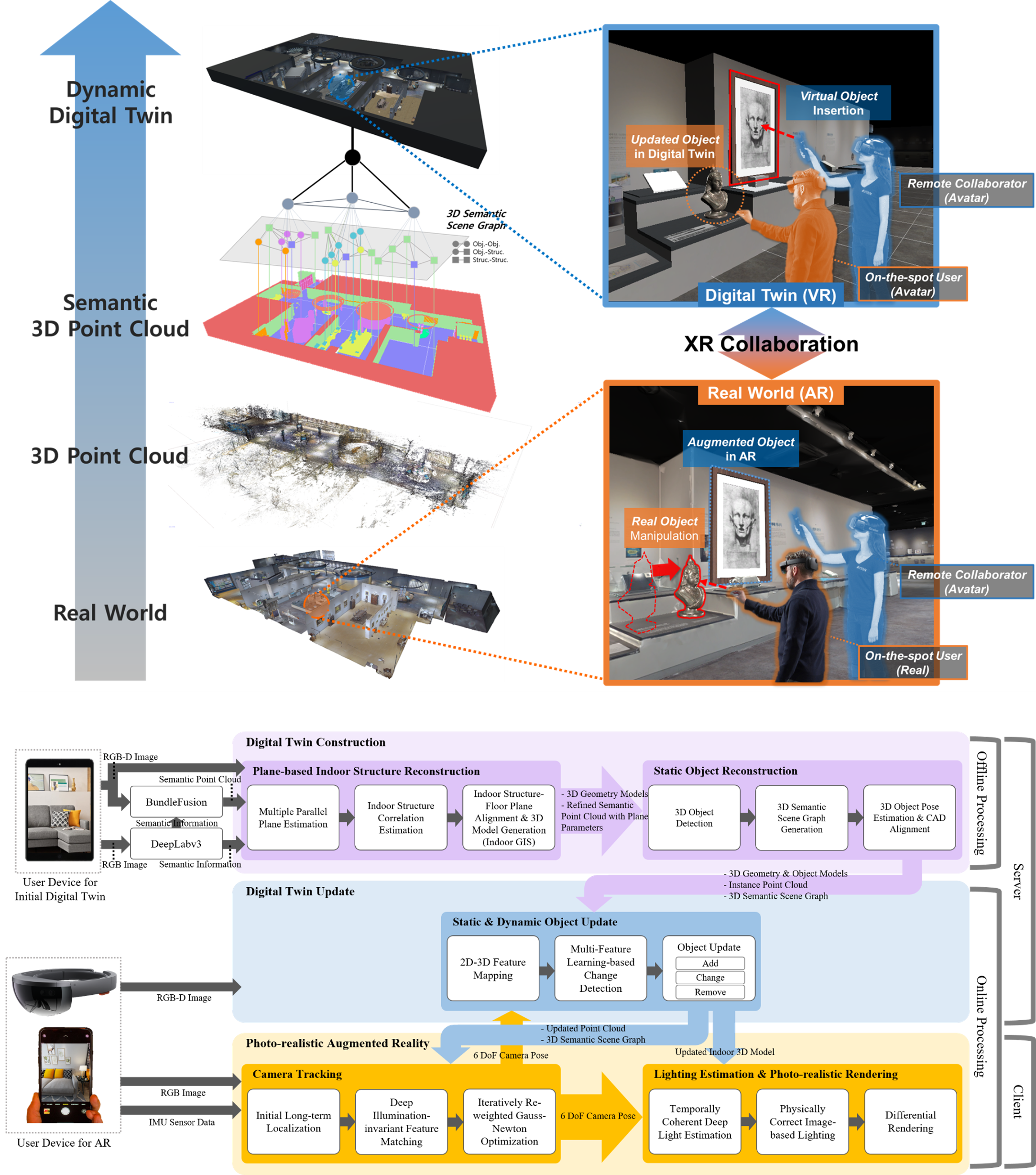

In this project, we study a scene graph-based 3D scene reconstruction method for the dynamic digital twin. The dynamic digital twin tracks and updates the movement of physical objects in the indoor environment in real time. In addition, the project studies XR interworking technology in which on-site and remote users experience and manipulate the same environment, directly or indirectly, through realistic rendering-based augmented and virtual reality. Ultimately, this project will enable a range of realistic non-face-to-face collaboration applications. To implement this system, we intend to tightly integrate specialized technologies from several fields, such as computer vision, computer graphics, and indoor GIS.

Digital twin, Scene Graph, SLAM, Scene Reconstruction, Object Detection and Tracking, Semantic Segmentation, AR, VR

The main contribution of the proposed project is keeping the virtual environment of the digital twin in a state identical to the dynamic indoor environment. This allows non-face-to-face collaboration services based on digital twin technology to be used in dynamic environments. The map and object information generated by this project can be linked to the digital twin through the semantic relationships between the indoor space and its objects, so that the system responds efficiently to changes such as object movement and changes to the indoor space. The results are expected to be used in various fields related to non-face-to-face collaboration, such as e-commerce, teleconferencing, education, games, museums, plays, and concerts.

An integrated XR platform provides multiple AR/VR devices with a 3D semantic map synchronized with the indoor environment for the digital twin. This integrated platform allows AR/VR devices to interact with physical environments, including moving objects.

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. 2021R1A2C2011459).

WISE AR UI/UX Platform

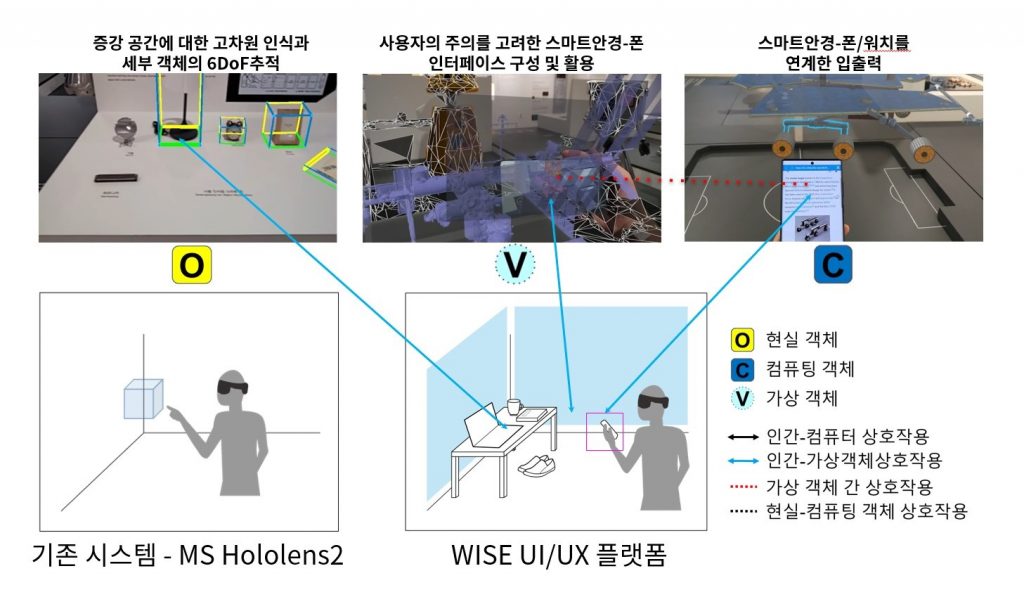

For smart glasses to be applied effectively in everyday life, anyone should be able to use them intuitively, and the interface should reflect the surrounding situation and individual characteristics. This project therefore researches and develops WISE (Wearable Interface for Sustainable Enhancement), which aims beyond the purely functional use of an interface. WISE provides a dynamic interface that reflects the overall state of the user’s attention and intention, recognizes all surrounding spaces and objects as semantic objects through which related information can be accessed, and uses each object as an input tool according to its characteristics.

Dynamic interface technology based on user intent for multi-task situations, Parameterization of everyday objects and development of input recognition and object control modules based on multi-user intentions, A technique for estimating the user’s global position in any indoor space, Integrated WISE AR UI/UX Platform for Smart Glasses

The short-term goal is to release the WISE UI/UX platform developed through this project, along with the module technologies that make up the platform, as open software so that it can be used in industry and academia. In addition, we expect the researchers trained through this project to contribute, through their industry-academic activities, to the development of emerging ICT fields such as augmented reality, virtual reality, and the metaverse. Ultimately, the platform should make it easier for various stakeholders, such as individual producers, small-scale project teams at home and abroad, and mobile communication, manufacturing, education, and distribution companies, to build projects and services using augmented reality.

The integrated WISE UI/UX platform makes smart glasses “magically” easy and useful anywhere, indoors and outdoors. It is a dynamic interface that reflects the user’s overall state, including the user’s intention. It can recognize both surrounding spaces and objects as semantic objects.

This research is supported by the Institute of Information & communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (No. 2019-0-01270, WISE AR UI/UX Platform Development for Smartglasses).

- Eunhwa Song, Taewook Ha, Junhyeok Park, Hyunjin Lee, Woontack Woo, "Holistic Quantified-Self: An Integrated User Model for AR Glass Users", 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), doi:10.1109/ISMAR-Adjunct60411.2023.00092. Conf. Int.

- Hui-Shyong Yeo, Erwin Wu, Daehwa Kim, Juyoung Lee, Hyung-il Kim, Seo Young Oh, Luna Takagi, Woontack Woo, Hideki Koike, Aaron John Quigley, "OmniSense: Exploring Novel Input Sensing and Interaction Techniques on Mobile Device with an Omni-Directional Camera", Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, doi:10.1145/3544548.3580747. Conf. Int.

- Hyunjin Lee, Woontack Woo, "Exploring the Effects of Augmented Reality Notification Type and Placement in AR HMD while Walking", 2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR), doi:10.1109/VR55154.2023.00067. Conf. Int.

- Sunyoung Bang, Woontack Woo, "Enhancing the Reading Experience on AR HMDs by Using Smartphones as Assistive Displays", 2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR), doi:10.1109/VR55154.2023.00053. Conf. Int.

- Eunhwa Song, Minju Baeck, Jihyeon Lee, Seo Young Oh, Dooyoung Kim, Woontack Woo, Jeongmi Lee, Sang Ho Yoon, "Memo:me, an AR Sticky Note With Priority-Based Color Transition and On-Time Reminder", 2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), doi:10.1109/VRW58643.2023.00126. Conf. Int.

A-museum : Art Museum XR Twin

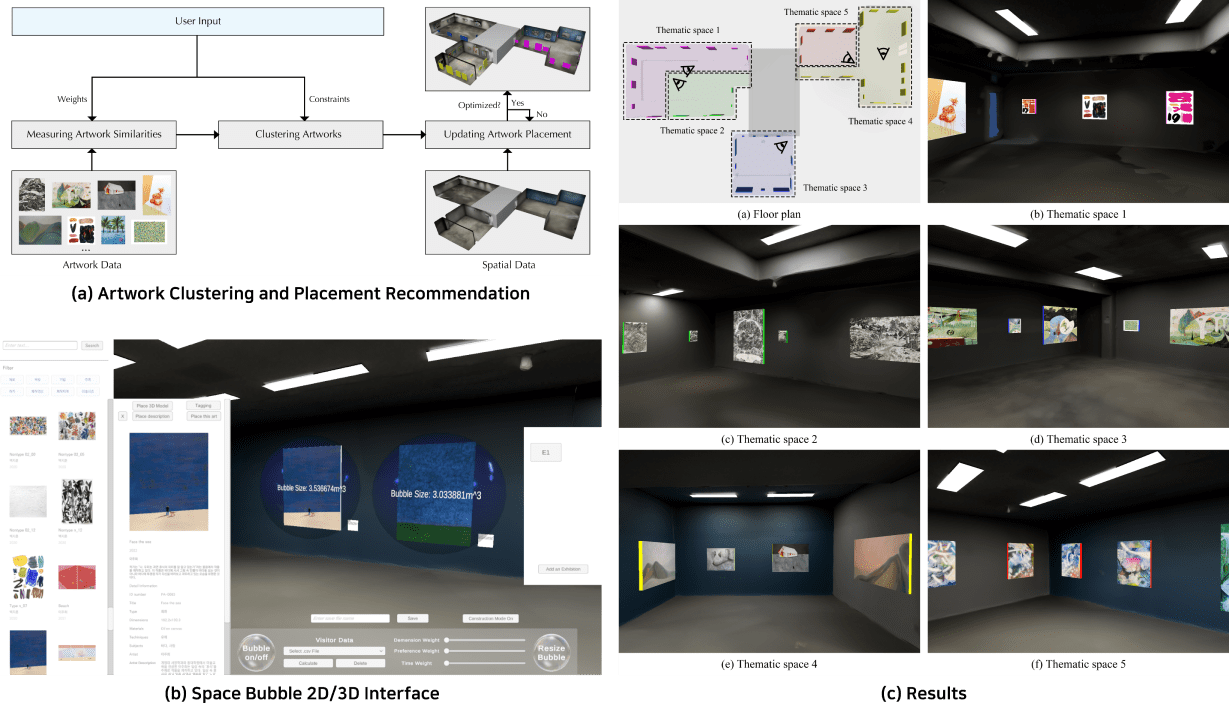

Our primary objective is to develop an XR Twin-based Space-telling Authoring Tool tailored for art museum curators. This tool aims to empower curators with the capability to virtually curate exhibitions by replicating exhibition spaces and arranging artworks within a virtual environment. To achieve this, our approach encompasses cutting-edge visualization, data transformation, simulation, and visitor information analysis technologies, all meticulously integrated to streamline pre-planning and enable online showcasing. Through this cultural technology research, our ultimate goal is to optimize exhibition curation practices and significantly enhance the overall museum experience.

Artwork Recommendation, Sorting, and Placement Technology based on Artwork Metadata, Exhibition Space Information, Curatorial Narrative, and Visitor Data

- Empowering curators with the capability to create virtual exhibitions and conduct simulations in a digital twin environment

- Facilitating artwork recommendation, placement recommendation, visitor path recommendation, and curatorial narrative creation by leveraging a wide range of contextual elements, including artwork metadata, exhibition space information, curatorial narrative, and visitor data

- Offering a user-friendly and immersive authoring interaction, ensuring a seamless and convenient exhibition creation experience

We present a virtual exhibition system that automatically optimizes artwork placements in thematic space layouts. Artworks are clustered based on five content factors: color, material, description, artist, and production date. Curators can adjust their importance and cluster sizes according to their design goals. A genetic optimization algorithm is used to determine artwork placement, evaluating spatial characteristics with four cost functions: intra-cluster distance, inter-cluster distance, intra-cluster intervisibility, and occupancy. Additionally, we have developed the “Bubble” element to ensure minimum distances between works based on visitor data, prioritizing safety and comfort. Moreover, curators have the flexibility to fine-tune space arrangements by adjusting default values. We are also developing an interface for collaborative authoring with generative AI to create curatorial narratives, referred to as Space-telling content, suitable for XR twin-based art museum environments. Through the chain method, we aim to simplify content generation and enhance the authoring experience by fostering strong user-AI cooperation.
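The placement step described above can be illustrated with a small genetic algorithm. The sketch below is not the system's implementation and makes several assumptions: artworks are assigned to a fixed list of candidate wall slots (hypothetical 2D coordinates), only two of the four cost terms are modeled (intra-cluster and inter-cluster distance), occupancy is reduced to the hard constraint of one artwork per slot, and intervisibility and the "Bubble" element are omitted. The function names and the `w_inter` weight are illustrative.

```python
import itertools
import math
import random


def cost(assignment, slots, clusters, w_inter=0.5):
    """Lower is better: compact clusters whose centroids lie far apart.

    assignment[i] is the slot index of artwork i; clusters[i] is its cluster.
    """
    by_cluster = {}
    for art, slot in enumerate(assignment):
        by_cluster.setdefault(clusters[art], []).append(slots[slot])
    intra = 0.0
    for pts in by_cluster.values():
        pairs = list(itertools.combinations(pts, 2))
        if pairs:  # mean pairwise distance inside one cluster
            intra += sum(math.dist(a, b) for a, b in pairs) / len(pairs)
    centroids = [tuple(sum(c) / len(pts) for c in zip(*pts))
                 for pts in by_cluster.values()]
    cpairs = list(itertools.combinations(centroids, 2))
    inter = (sum(math.dist(a, b) for a, b in cpairs) / len(cpairs)
             if cpairs else 0.0)
    return intra - w_inter * inter


def order_crossover(a, b, rng):
    """Keep a slice of parent a in place, fill the rest in parent b's order."""
    i, j = sorted(rng.sample(range(len(a)), 2))
    middle = a[i:j]
    rest = [gene for gene in b if gene not in middle]
    return rest[:i] + middle + rest[i:]


def mutate(perm, rng, rate=0.2):
    """With probability `rate`, swap two positions of the permutation."""
    perm = perm[:]
    if rng.random() < rate:
        i, j = rng.sample(range(len(perm)), 2)
        perm[i], perm[j] = perm[j], perm[i]
    return perm


def optimize(slots, clusters, generations=200, pop_size=40, seed=0):
    """Evolve permutations of slot indices; the first n genes place the n artworks."""
    rng = random.Random(seed)
    n = len(clusters)

    def fitness(perm):
        return cost(perm[:n], slots, clusters)

    population = [rng.sample(range(len(slots)), len(slots))
                  for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness)
        elite = population[: pop_size // 4]  # elitism: the best quarter survives
        children = []
        while len(elite) + len(children) < pop_size:
            a, b = rng.sample(elite, 2)
            children.append(mutate(order_crossover(a, b, rng), rng))
        population = elite + children
    best = min(population, key=fitness)
    return best[:n], fitness(best)
```

Calling `optimize(slots, clusters)` with, for example, eight slots on a grid and six artworks in two clusters returns one slot index per artwork together with the final cost. Encoding a candidate layout as a permutation guarantees that no two artworks share a slot, so the crossover and mutation operators never produce an invalid layout.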

This research is supported by the Ministry of Culture, Sports and Tourism and Korea Creative Content Agency (Project Number: R2021080001).

- Hayun Kim, Maryam Shakeri, Jae-eun Shin, Woontack Woo, "Space-Adaptive Artwork Placement Based on Content Similarities for Curating Thematic Spaces in a Virtual Museum", ACM Journal on Computing and Cultural Heritage, https://doi.org/10.1145/3631134. Journal Int.

- Woontack Woo, Hayun Kim, Maryam Shakeri, "Space-Adaptive Automatic Placement Method and System Based on Content Similarity of Artworks in a Virtual Museum", [Application No.] 10-2023-0100227. Patent KR

- Hyerim Park, Maryam Shakeri, Ikbeom Jeon, Jangyoon Kim, Abolghasem Sadeghi-Niaraki, Woontack Woo, "Spatial transition management for improving outdoor cinematic augmented reality experience of the TV show", Springer Virtual Reality, doi:10.1007/s10055-021-00617-z. Journal Int.

Projects

- Past

Human reconstruction for telepresent interaction - This research was supported by the Next-Generation Information Computing Development Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science, ICT (NRF-2017M3C4A7066316), 2022.10~2023.11

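Development of a 360-degree VR content authoring tool platform based on global street view and spatial information - Ministry of Science and ICT / ICT and Broadcasting R&D Program, 2019.07~2020.12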

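User reconstruction technology for ultra-realistic remote virtual interaction - Ministry of Science, ICT and Future Planning / Next-Generation Information Computing Development Program, 2017.09~2020.12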
